Investigators are applying artificial intelligence technologies that can identify and stop internet crimes against children (ICAC), according to a maker of AI tools for law enforcement, government agencies, and enterprises.

Voyager Labs, in a blog post published Tuesday, explained that many ICAC task forces are using tools like “topic query searches” to find online material related to crimes against children.

Those systems can find accounts trading child sexual abuse material, identify and locate the offenders using those accounts, identify potential victims, and compile crucial data to help law enforcement open a case against the criminals, Voyager noted.

It added that this process is often remarkably fast, sometimes returning usable results in seconds.

Another tool Voyager cited that investigators are using is the “topic query lexicon,” which works like a translation dictionary or code book. Criminal investigators can contribute their knowledge of criminal communications (slang, terminology, and emojis) to the lexicon, which AI software can use to find references to criminal activity online.

Voyager’s National Investigations Director Jason Webb, who contributed to the blog, explained that the lexicon acts as a shared database, enabling various types of specialists to apply one another’s knowledge to their research.
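The lexicon idea described above can be pictured as a shared lookup table that maps coded terms to plain-language topics. The following is only a minimal sketch under stated assumptions: the `LEXICON` entries are neutral placeholders, and the names `LEXICON` and `find_lexicon_hits` are hypothetical. Nothing here reflects how Voyager's software actually works.

```python
import re

# Hypothetical shared lexicon: coded term -> plain-language topic.
# Entries are neutral placeholders, not real slang.
LEXICON = {
    "blue widget": "topic_a",
    "gizmo": "topic_b",
    "\U0001F511": "topic_a",  # key emoji used as a placeholder symbol
}


def find_lexicon_hits(text, lexicon=LEXICON):
    """Return a sorted list of (term, topic) pairs whose term appears in text."""
    lowered = text.lower()
    hits = []
    for term, topic in lexicon.items():
        if term.replace(" ", "").isalnum():
            # Word-like terms must match on word boundaries, ignoring case.
            pattern = r"\b" + re.escape(term.lower()) + r"\b"
            found = re.search(pattern, lowered) is not None
        else:
            # Emojis and other symbols are matched as plain substrings.
            found = term in text
        if found:
            hits.append((term, topic))
    return sorted(hits)
```

Because every contributor adds to the same table, a term one specialist recognizes becomes searchable by everyone who queries the lexicon.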

We Know Where You Live

Artificial intelligence is also useful for network analysis. If there are pages where child sexual abuse material has been traded, Voyager noted, AI can see who interacts with those pages and determine whether multiple offenders are involved in ICAC rings.

It is common for gangs to run both ICAC activities and human trafficking, so these investigations have the potential to uncover complex criminal organizations engaged in a range of illegal activities, it explained.
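The network analysis described above amounts to grouping accounts that are linked through the pages they interact with. The sketch below is an illustration under stated assumptions, not Voyager's method: the input is a hypothetical list of `(account, page)` pairs, and the function name `find_account_clusters` is invented for this example.

```python
from collections import defaultdict


def find_account_clusters(interactions, min_size=2):
    """Group accounts linked, directly or indirectly, by shared pages.

    interactions: iterable of (account, page) pairs (hypothetical input format).
    Returns a list of sorted account lists, one per cluster of at least min_size.
    """
    accounts_by_page = defaultdict(set)
    for account, page in interactions:
        accounts_by_page[page].add(account)

    # Two accounts are neighbors if they interacted with the same page.
    neighbors = defaultdict(set)
    for accounts in accounts_by_page.values():
        for account in accounts:
            neighbors[account] |= accounts - {account}

    seen = set()
    clusters = []
    for start in sorted(neighbors):
        if start in seen:
            continue
        # Depth-first search collects everything reachable from start.
        component = set()
        stack = [start]
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(neighbors[node] - component)
        seen |= component
        if len(component) >= min_size:
            clusters.append(sorted(component))
    return clusters
```

In this sketch, two accounts that never touch the same page can still land in one cluster if a third account connects them, which is the kind of indirect link that could point to a ring and not just isolated individuals.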

Voyager added that some systems can reveal the geographic location of criminal activity. Sharing geo data may be one of the most significant factors in reducing ICAC, Voyager maintained, because it empowers agencies that have this new technology to share their research with smaller departments that may not have the same resources.

In addition to identifying criminal activity, Voyager noted that network analysis can be used to indicate potential victims. Once law enforcement and families are alerted that criminals have attempted to contact a child, Voyager explained, immediate steps can be taken to remove the child from potential harm.

Voyager claims that in many cases, all of this kind of AI research can be accomplished solely through open-source intelligence, which is available to the public with no warrant or special permissions. However, in the past, the company has gotten in hot water over its data collection practices.

In January 2023, Meta filed a lawsuit against Voyager Labs in U.S. federal court in California, alleging the company improperly scraped data from Facebook and Instagram platforms and profiles.

Meta claims Voyager created more than 38,000 fake accounts and used those to scrape 600,000 Facebook users’ “viewable profile information.” Voyager allegedly marketed its scraping tools to companies interested in conducting surveillance on social media sites without being detected and then sold the information to the highest bidder.

Voyager contends that the lawsuit is meritless, reveals a fundamental misunderstanding of how the software products at issue work, and, most importantly, is detrimental to U.S. and global public safety.

Filtering Nude Photos

Artificial intelligence can also identify child sexual abuse material through services like Microsoft’s PhotoDNA and Google’s Hash Matching API. Those services use a library of known child sexual abuse content to identify that content when it’s being circulated or stored online.

“However, that only solves the issue of known content or fuzzy matches, and though some companies are building models to catch new child sexual abuse material, there are significant regulations as to who can access these data sets,” said Joshua Buxbaum, co-founder and chief growth officer at WebPurify, a cloud-based web filtering and online child protection service in Irvine, Calif.

“As with all AI models, nothing is by any means perfect, and humans are needed in the loop to verify the content, which is not ideal from a mental health perspective,” he told TechNewsWorld.
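The general idea of checking files against a library of known content can be illustrated with ordinary file hashing. This is a simplified sketch with a swapped-in technique: it uses exact SHA-256 digests from Python's standard `hashlib` module, not the proprietary matching the services named above use, so it would flag only byte-identical copies and would miss the fuzzy matches Buxbaum mentions. The function names and sample byte strings are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_known_hashes(samples):
    """Build a set of digests from an iterable of known byte strings."""
    return {sha256_hex(sample) for sample in samples}


def is_known(data: bytes, known_hashes) -> bool:
    """Report whether data exactly matches an item in the known-hash library."""
    return sha256_hex(data) in known_hashes
```

Storing only digests means the library can be shared and queried without distributing the underlying files, but any change to a file, even a single byte, produces a different digest in this exact-match version.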

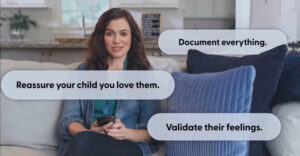

There are also standalone parental control applications, like Canopy, that can filter incoming and outgoing images that contain nudity. “It alerts parents if it seems their children are sexting,” said Canopy CMO Yaron Litwin.

“This can prevent those images from falling into the wrong hands and gives parents a chance to educate their children on the dangers of what they are doing before it is too late,” he told TechNewsWorld.

“Social platforms allow predators to send photos while also requesting photos from the minor,” Chris Hauk, consumer privacy champion at Pixel Privacy, a publisher of consumer security and privacy guides, told TechNewsWorld. “This allows predators to extort money or other types of photos while threatening to tell parents if the child doesn’t comply.”

Litwin added that “sextortion” (using nude images of children to extort more images or money from them) rose 322% year over year in February.

“Some sextortion cases have resulted in the victims committing suicide,” he said.

Digital Education Vital for Child Protection

Child sexual abuse material also continues to be a problem on social media.

“Our analysis shows social media account removals related to child abuse and safety are steadily rising on most platforms,” Paul Bischoff, privacy advocate at Comparitech, a reviews, advice, and information website for consumer security products, told TechNewsWorld.

He cited a report released by Comparitech that found that during the first nine months of 2022, content removals for child exploitation almost equaled all removals for 2021. Similar trends could be found for Instagram, TikTok, Snapchat, and Discord.

“This is a really serious issue that is only getting worse over time,” Litwin said. “Parents today didn’t grow up in the same digital world as their children and aren’t always aware of the range of threats their kids face on a daily basis.”

Isabelle Vladoiu, founder of the US Institute of Diplomacy and Human Rights, a nonprofit think tank in Washington, D.C., asserted that the most important thing that can be done to keep kids safe online is to educate them.

“By providing comprehensive digital literacy programs, such as digital citizenship training, raising awareness about online risks, and teaching children to recognize red flags, we empower them to make informed decisions and protect themselves from exploitation,” she told TechNewsWorld.

“Real-life examples have shown that when children are educated about trafficking risks and online safety, they become more empowered to stand against torture and exploitation,” she continued, “fostering a safer online environment for all.”